Automated systems making coverage decisions now face intense regulatory scrutiny as federal agencies coordinate enforcement against discriminatory algorithms. Insurance commissioners across 24 states adopted frameworks in 2024 requiring carriers to prove their models don’t discriminate against protected classes. The National Association of Insurance Commissioners reports widespread artificial intelligence deployment across underwriting, pricing, claims, and fraud detection, creating multiple points where bias can enter. Recent enforcement actions demonstrate growing consequences: SafeRent agreed in 2024 to pay over $2 million to settle claims over discriminatory tenant screening algorithms, while a court certified a collective action against an AI vendor whose hiring tools allegedly discriminated by age.

Machine learning models analyzing thousands of data points promise efficiency but carry substantial fairness risks. Algorithms trained on biased historical data produce discriminatory outcomes even without discriminatory intent. Federal agencies warned in April 2024 that automated systems must comply with existing civil rights laws. Colorado pioneered comprehensive testing requirements through Senate Bill 21-169, while New York issued detailed guidance on algorithmic fairness. The regulatory landscape continues evolving rapidly as states adopt varying approaches to AI governance.

Quick Answer: Federal and state regulators now require insurers deploying AI underwriting systems to conduct bias testing and implement governance frameworks that prevent discriminatory outcomes.

Understanding these requirements matters for insurers navigating compliance obligations and for consumers seeking protection against biased AI underwriting systems.

What You Need to Know

- Federal civil rights agencies confirmed existing anti-discrimination laws apply to algorithmic insurance decisions through April 2024 joint enforcement statement

- Colorado mandates quantitative bias testing using statistical methods for life, auto, and health insurers effective through 2025

- New York requires insurers to demonstrate AI systems don’t correlate with protected characteristics or produce unfair outcomes

Understanding AI Underwriting Systems

Modern underwriting relies on algorithms processing vast datasets including credit histories, driving records, purchasing patterns, and social media activity. These systems identify correlations human underwriters might miss by analyzing thousands of variables simultaneously. Traditional methods used actuarial tables and manual review, while current models generate risk scores and pricing recommendations automatically.

Technical architecture introduces multiple discrimination points. Training data reflecting past bias teaches algorithms to perpetuate inequitable patterns. Measurement errors occur when variables inadvertently correlate with protected characteristics. Aggregation problems emerge when models treat diverse populations as uniform groups. Research published in 2024 examining mortgage algorithms found systematic disadvantages for Black and Hispanic applicants even when excluding race explicitly.

The NAIC Innovation and Technology Committee reports insurers increasingly rely on third-party vendors for algorithmic models, creating oversight challenges. Carriers often lack transparency into vendor-supplied algorithm operations yet remain legally responsible for discriminatory outcomes. This vendor reliance complicates compliance as insurers must verify fairness of systems they didn’t develop.

Health insurers deployed an algorithm underestimating care needs for Black patients compared to equally sick white patients. The system used healthcare costs as a health proxy, failing to account for systemic barriers reducing care access among Black patients. This demonstrates how seemingly neutral variables can mask discrimination through proxy effects.
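The proxy effect described above can be made concrete with a small sketch. Everything in it is hypothetical: the `Patient` fields, the `COST_PER_CONDITION` figure, and both thresholds are invented for illustration and do not reproduce the actual algorithm.

```python
from dataclasses import dataclass

# Assumed figure for illustration only: annual cost of fully treating one condition.
COST_PER_CONDITION = 1000.0


@dataclass
class Patient:
    group: str
    chronic_conditions: int  # true health need
    access_factor: float     # share of needed care actually received, 0..1


def observed_cost(patient: Patient) -> float:
    """Spending visible to the model: need scaled by access to care."""
    return patient.chronic_conditions * COST_PER_CONDITION * patient.access_factor


def flagged_by_cost(patient: Patient, cost_threshold: float) -> bool:
    """Cost-as-proxy rule: flag only patients whose past spending is high."""
    return observed_cost(patient) >= cost_threshold


def flagged_by_need(patient: Patient, condition_threshold: int) -> bool:
    """Need-based rule: flag on the health measure itself."""
    return patient.chronic_conditions >= condition_threshold


# Two equally sick patients; the second received only 60% of needed care.
full_access = Patient("A", chronic_conditions=4, access_factor=1.0)
limited_access = Patient("B", chronic_conditions=4, access_factor=0.6)

for p in (full_access, limited_access):
    print(p.group, flagged_by_cost(p, 3000.0), flagged_by_need(p, 4))
```

Under these assumed numbers the cost rule flags only the patient with full access, while the need-based rule flags both, even though neither rule reads the `group` field.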

AI Insurance Applications:

| Function | Use Case | Discrimination Risk Level |
|---|---|---|
| Underwriting | External data risk assessment | High |
| Pricing | Predictive model premiums | High |
| Claims | Automated approvals/denials | Medium |
| Fraud Detection | Pattern recognition | Medium |
| Customer Service | Chatbot responses | Low |

The industry faces critical decisions as regulatory expectations crystallize around algorithmic fairness requirements.

Federal Regulatory Framework

Federal oversight operates through multiple agencies enforcing existing civil rights statutes rather than new AI-specific legislation. The April 2024 Joint Statement on Enforcement Efforts Against Discrimination from the EEOC, FTC, CFPB, and the Justice Department’s Civil Rights Division emphasizes that longstanding discrimination laws apply fully to algorithmic decisions. Multi-agency coordination signals a heightened enforcement priority across government.

The Federal Trade Commission exercises authority under Section 5 addressing unfair and deceptive practices, including discriminatory algorithms. Agency enforcement posture indicates willingness to define algorithmic bias as substantial injury affecting demographic group social standing. This interpretation brings discriminatory AI tools within FTC jurisdiction even without proving individual financial harm.

Title VII, the Fair Housing Act, the Americans with Disabilities Act, and the Age Discrimination in Employment Act prohibit practices that create disparate impact on protected classes regardless of intent. Courts have applied these statutes to algorithmic systems, establishing that companies cannot shield discriminatory outcomes behind claims of neutral technology. The 2025 Mobley v. Workday decision certified a collective action against an AI vendor whose hiring algorithms allegedly discriminated by age, a theory of vendor liability that could extend to vendors supplying insurance underwriting models.

Congressional efforts gained momentum with Senator Markey’s September 2024 Artificial Intelligence Civil Rights Act prohibiting algorithmic systems causing discriminatory disparate impact. Representative Lee introduced the Eliminating Bias in Algorithmic Systems Act requiring federal agencies using AI to establish civil rights offices. Neither passed in 2024 but signal legislative direction informing agency enforcement priorities.

The Federal Insurance Office released its January 2025 Algorithmic Bias in Insurance study examining how automated systems affect coverage access and pricing across property-casualty, life, and health markets. Analysis documented systematic disparities in denials and premiums correlated with protected characteristics even in algorithms excluding demographic variables directly. Recommendations included enhanced state coordination and standardized testing methodologies.

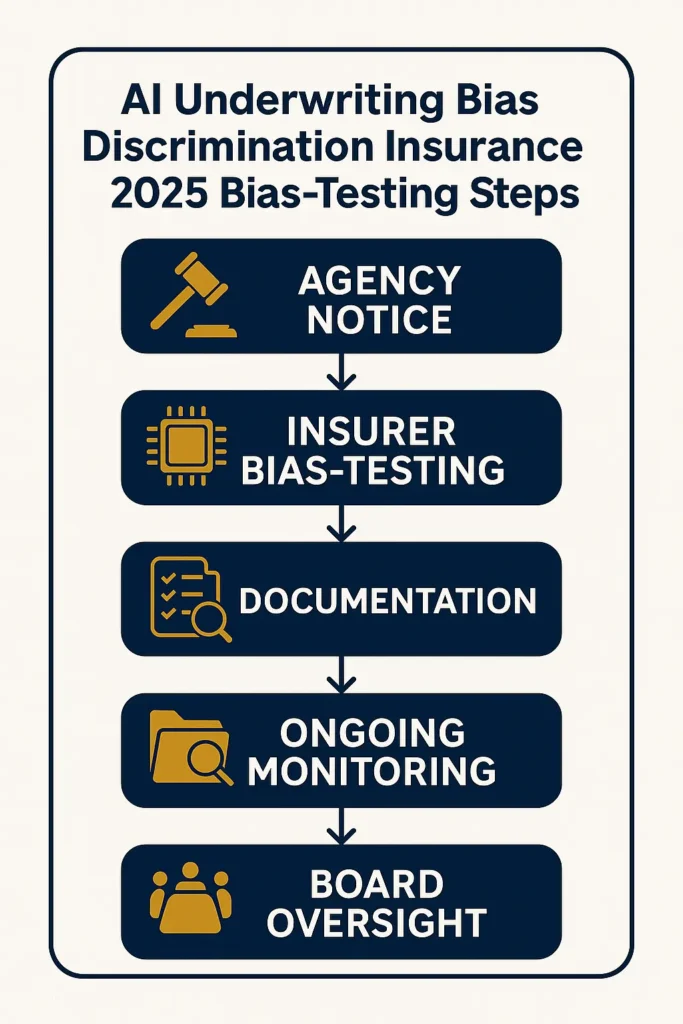

Federal agencies established clear insurer obligations: conduct pre-deployment bias testing using appropriate statistical methods, implement ongoing monitoring detecting model drift and emerging patterns, maintain compliance documentation, exercise third-party vendor oversight, provide consumer transparency about algorithmic decisions, and establish board-level governance accountability.
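As a rough illustration of what ongoing drift monitoring can look like, the sketch below computes a Population Stability Index (PSI), a widely used drift measure that compares the share of scores falling in each bucket at deployment against a later period. PSI is an assumption chosen for this example, not a method the agencies prescribe, and the bucket counts are invented.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline_counts: Sequence[int],
    current_counts: Sequence[int],
    floor: float = 1e-6,
) -> float:
    """PSI = sum over buckets of (current - baseline) * ln(current / baseline),
    where both terms are bucket shares. `floor` avoids log(0) for empty buckets."""
    if len(baseline_counts) != len(current_counts):
        raise ValueError("both periods must use the same score buckets")
    baseline_total = sum(baseline_counts)
    current_total = sum(current_counts)
    if baseline_total <= 0 or current_total <= 0:
        raise ValueError("each period needs at least one observation")

    psi = 0.0
    for base, cur in zip(baseline_counts, current_counts):
        base_share = max(base / baseline_total, floor)
        cur_share = max(cur / current_total, floor)
        psi += (cur_share - base_share) * math.log(cur_share / base_share)
    return psi


# Hypothetical counts of applicants per risk-score bucket.
at_deployment = [100, 200, 300, 400]
six_months_later = [250, 250, 250, 250]

print(round(population_stability_index(at_deployment, at_deployment), 4))
print(round(population_stability_index(at_deployment, six_months_later), 4))
```

A value near zero means the score distribution has not moved. What counts as a material shift is a governance decision each insurer would need to document, and a drift check of this kind complements rather than replaces outcome testing by protected group.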

State-Level Variations

State insurance departments emerged as primary algorithmic bias regulators, adopting divergent approaches creating complex compliance landscapes. Colorado pioneered comprehensive regulation through Senate Bill 21-169, signed July 2021, prohibiting insurers from using external consumer data, algorithms, or models resulting in unfair discrimination based on protected characteristics.

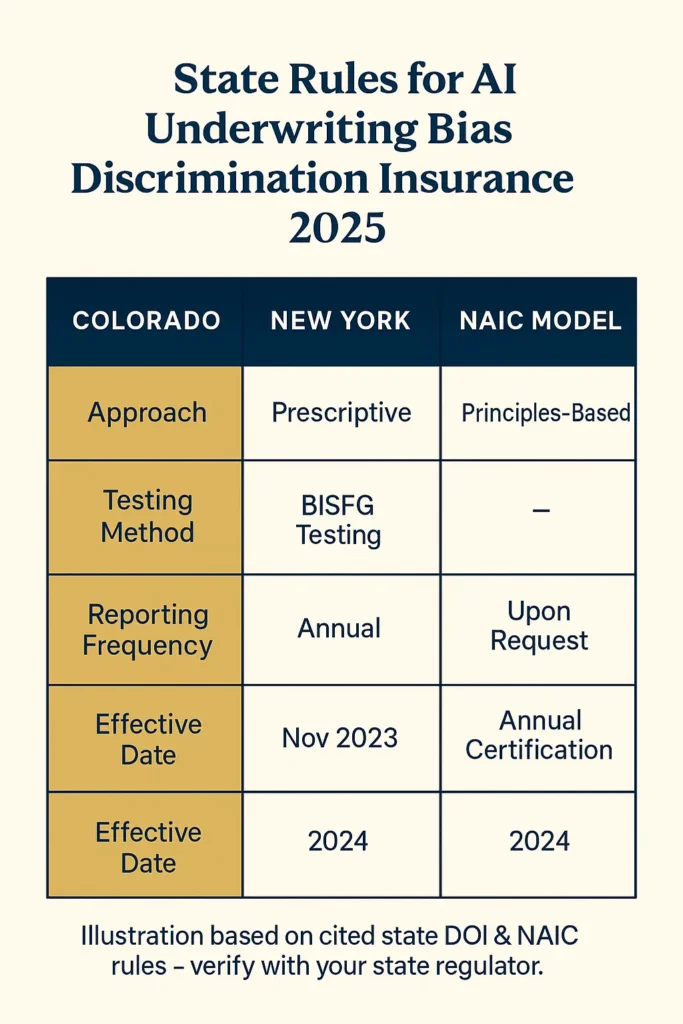

Colorado’s Regulation 10-1-1 for life insurance, effective November 2023, requires carriers using external data to establish governance frameworks including cross-functional oversight committees, risk assessment rubrics, and comprehensive model inventories. Life insurers submitted progress reports by June 2024 and full compliance documentation by December 2024. The regulation mandates quantitative testing using Bayesian Improved Surname Geocoding detecting disparate racial and ethnic impact, with significant disparities triggering corrective action.
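The Bayesian step behind surname geocoding can be sketched as follows. This is a minimal illustration of the general technique as commonly described (a surname-based prior updated with geographic evidence), not the procedure set out in Colorado’s regulation. The function name, group labels, and every probability are hypothetical.

```python
from typing import Dict, Sequence


def group_posterior(
    prior_given_surname: Dict[str, float],
    likelihoods: Sequence[Dict[str, float]],
) -> Dict[str, float]:
    """Bayes update: P(group | evidence) is proportional to
    P(group | surname) times the product of P(evidence_i | group).

    Assumes the evidence sources are conditionally independent given the
    group, the simplifying assumption this family of methods relies on.
    """
    unnormalized = {}
    for group, prior in prior_given_surname.items():
        weight = prior
        for table in likelihoods:
            weight *= table[group]
        unnormalized[group] = weight

    total = sum(unnormalized.values())
    if total <= 0:
        raise ValueError("evidence rules out every group; cannot normalize")
    return {group: weight / total for group, weight in unnormalized.items()}


# Invented probabilities, not census figures.
surname_prior = {"group_1": 0.70, "group_2": 0.20, "group_3": 0.10}
geo_likelihood = {"group_1": 0.001, "group_2": 0.004, "group_3": 0.002}

posterior = group_posterior(surname_prior, [geo_likelihood])
for group, probability in posterior.items():
    print(group, round(probability, 3))
```

Passing an additional likelihood table (for example, one keyed on first name) extends the same update. Because the output is a probability rather than a known attribute, any downstream disparity estimate inherits the inference error.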

New York’s Department of Financial Services issued Circular Letter No. 7 in 2024 establishing AI and external consumer data expectations. Requirements demand insurers demonstrate data sources and models don’t use or correlate with protected characteristics and don’t produce unfairly discriminatory outcomes. Unlike Colorado’s prescriptive methodology, New York maintains principles-based oversight allowing insurer flexibility while reserving examination authority.

The NAIC Model Bulletin on the Use of Artificial Intelligence Systems by Insurers, adopted December 2023, provides a template 24 states adopted by mid-2025. The bulletin requires insurers to implement AI Programs including governance structures, risk controls, documentation protocols, and vendor oversight. Maryland, Alaska, New Hampshire, Connecticut, and 20 additional states adopted versions with varying dates and minor customizations.

State Regulation Comparison:

| State | Type | Effective | Testing Required | Reporting Frequency |
|---|---|---|---|---|
| Colorado | Prescriptive | Nov 2023 | BIFSG quantitative | Annual compliance |
| New York | Principles | 2024 | Insurer-designed | Upon examination |
| Connecticut | NAIC Model | 2024 | Governance framework | Annual certification |
| California | Pending | TBD | Healthcare restrictions | TBD |

Three regulatory models emerge. Colorado’s prescriptive framework mandates specific methodologies and thresholds, providing clarity but limiting flexibility. New York’s principles-based approach establishes expectations while allowing carriers to design compliance measures, risking inconsistent application. NAIC Model adopters create baseline governance without detailed protocols, relying on examinations assessing adequacy.

Colorado enacted SB24-205, the Consumer Protections for Artificial Intelligence Act, effective February 2026, requiring developers and deployers of high-risk AI to conduct discrimination impact assessments and provide consumer appeal rights. California introduced AB 2930 in 2024 targeting discrimination in consequential decisions, though the bill failed. These broader frameworks interact with insurance-specific regulations, creating layered obligations.

Testing methodology challenges persist. Colorado’s BIFSG method (Bayesian Improved First Name Surname Geocoding) infers race and ethnicity from names and addresses, raising accuracy concerns. Alternatives including blind testing, counterfactual analysis, and distribution matching carry their own methodological limitations. The National Fair Housing Alliance’s April 2024 mortgage underwriting report recommended distribution matching, which could inform future standards.
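To show what counterfactual analysis involves, and one of its limitations, here is a minimal sketch. The scoring function, field names, and ZIP codes are hypothetical stand-ins, not any insurer’s model.

```python
from typing import Any, Callable, Dict, Iterable, List

Applicant = Dict[str, Any]


def counterfactual_gaps(
    score: Callable[[Applicant], float],
    applicants: Iterable[Applicant],
    attribute: str,
    values: List[str],
) -> List[float]:
    """For each applicant, score copies that differ only in `attribute`
    and return the spread (max minus min) of the resulting scores."""
    gaps = []
    for applicant in applicants:
        scores = [score({**applicant, attribute: value}) for value in values]
        gaps.append(max(scores) - min(scores))
    return gaps


def toy_score(applicant: Applicant) -> float:
    """Hypothetical model: never reads `group`, but penalizes one ZIP code."""
    base = 0.5 + 0.01 * float(applicant["years_insured"])
    return base - (0.2 if applicant["zip_code"] == "00001" else 0.0)


applicants = [
    {"group": "x", "years_insured": 10, "zip_code": "00001"},
    {"group": "y", "years_insured": 10, "zip_code": "00002"},
]

gaps = counterfactual_gaps(toy_score, applicants, "group", ["x", "y"])
print(gaps)
print(toy_score(applicants[0]), toy_score(applicants[1]))
```

Swapping the group label changes nothing for either applicant, so the counterfactual check passes, yet the two otherwise identical applicants still receive different scores because of the ZIP code penalty. A test that flips only the protected attribute cannot see disparities that flow through correlated variables.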

Impact on Policyholders

Algorithmic bias produces tangible harms beyond pricing disparities including coverage denials, reduced limits, and steering toward less favorable products. Research reveals systematic patterns affecting Black, Hispanic, disabled, female, and older consumers.

Marcus, 58, Chicago: Marcus applied for 15 positions through AI recruiting platforms that scored resumes before human review. Despite 30 years of experience, he received 14 rejections without an interview. After filing complaints, he discovered the algorithm systematically down-ranked applicants over 50, costing him an estimated $85,000 in lost wages during six months of unemployment. Lesson: Automated systems can create invisible age-based barriers, which is why regulatory oversight and consumer appeal rights matter.

Tamara, 42, Atlanta: Tamara sought rental housing through property management platforms that automate tenant screening. The algorithm assigned her a score that led to eight apartment rejections. Analysis revealed the model produced disparate impact on Black and Hispanic applicants by relying on criminal records and eviction filings, which disproportionately affect these groups. She incurred $2,400 in extended hotel costs while searching for housing. Lesson: Screening algorithms using criminal justice data perpetuate systemic racial discrimination even without explicitly considering race.

Jennifer, 36, Denver: Jennifer had health coverage for chronic conditions requiring regular monitoring. After her insurer implemented AI care management algorithms, she was repeatedly denied specialist referrals her physicians deemed medically necessary. Internal analysis revealed the algorithm underestimated care needs for her demographic by using costs as a proxy for health, failing to account for access barriers. She paid $6,700 out of pocket for denied services. Lesson: Healthcare algorithms using cost proxies systematically underprovide care to populations facing access disparities.

Protected classes face discrimination through proxy variables correlating with protected characteristics. Algorithms excluding race still discriminate by incorporating credit scores, ZIP codes, education, or purchasing behaviors correlating with racial demographics. This indirect discrimination appears neutral while producing disparate outcomes.
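A first-pass screen for proxy variables is to measure how strongly each candidate feature correlates with group membership. The sketch below uses a plain Pearson correlation. The sample data and feature names are invented, and a low correlation on its own would not prove a feature is safe, since proxies can also act in combination.

```python
import math
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length (at least 2)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / math.sqrt(var_x * var_y)


# Invented sample: 1 marks membership in a protected group, 0 otherwise.
group_flag = [1, 1, 1, 1, 0, 0, 0, 0]
features = {
    "credit_score": [610, 640, 600, 650, 700, 720, 690, 730],
    "years_licensed": [5, 12, 20, 8, 6, 11, 19, 9],
}

for name, values in features.items():
    print(name, round(pearson(group_flag, values), 3))
```

In this made-up sample the credit score tracks group membership closely while years licensed does not, so a model could drop the group field and still reconstruct most of it from credit score alone.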

Consumer protection challenges intensify because decisions lack transparency. Applicants denied coverage or charged higher premiums cannot determine whether discrimination occurred or understand driving factors. The NAIC Model Bulletin requires insurers to provide AI use information yet stops short of mandating individual decision explanations. Privacy concerns and proprietary algorithm protection create transparency tensions.

Cumulative algorithmic bias effects compound existing socioeconomic disparities. Insurance coverage affects financial services access, housing, healthcare, and employment—creating cascading consequences when biased algorithms restrict protected group access. Analysis found denials based on algorithmic assessments disproportionately affected minority neighborhoods, perpetuating residential segregation and limiting economic mobility.

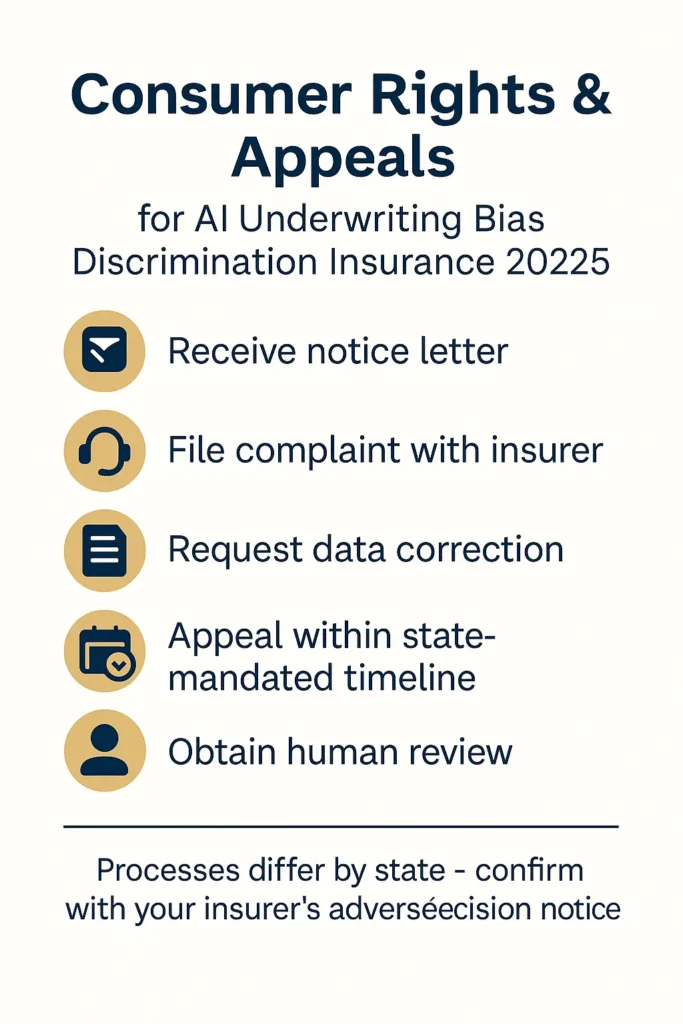

Regulations increasingly mandate consumer protections. Colorado requires insurers to establish complaint processes, provide AI use notices, and offer adverse decision appeals. New York emphasizes transparency obligations and data correction rights. California’s 2024 healthcare legislation prohibits coverage denials made solely by AI without human oversight, establishing a precedent other states may follow.

Advocates stress preventing algorithmic discrimination requires proactive bias testing rather than reactive complaints. The Leadership Conference on Civil and Human Rights noted marginalized communities often lack resources identifying and challenging bias, making regulatory oversight essential.

Frequently Asked Questions: AI Underwriting Bias and Discrimination in Insurance (2025)

What is the AI regulation in 2025?

Regulation operates primarily at state level with 24 states adopting the NAIC Model Bulletin requiring governance programs for AI systems. Colorado leads with comprehensive testing under SB21-169, mandating quantitative bias analysis for life, auto, and health algorithms. New York established principles-based fairness expectations. Federal agencies coordinate enforcement of existing civil rights laws through April 2024 joint guidance, though Congress hasn’t enacted AI-specific federal insurance legislation. Colorado’s SB24-205, effective February 2026, creates broader consumer protections for high-risk systems beyond insurance.

Is AI going to replace insurance underwriters?

Artificial intelligence transforms rather than eliminates underwriting roles. Algorithms increasingly handle routine risk assessment and data processing, but human underwriters remain essential for complex cases, judgment calls, compliance, and bias oversight. The NAIC Model Bulletin explicitly requires human accountability for AI-generated consumer decisions. Research indicates AI performs poorly on perception, common sense, and contextual reasoning tasks fundamental to underwriting. Most insurers deploy AI as decision-support tools augmenting rather than replacing expertise. The shift creates demand for underwriters with data science skills validating outputs and identifying discriminatory patterns.

How are mortgage lenders thinking about AI in 2025?

Mortgage lenders approach AI cautiously following enforcement actions and research documenting algorithmic lending bias. The Consumer Financial Protection Bureau and Justice Department actively examine lending algorithms for Fair Housing and Equal Credit Opportunity violations. Lenders implementing AI must conduct disparate impact testing similar to insurance requirements. The National Fair Housing Alliance’s 2024 report recommends distribution matching to identify and correct discrimination. Many lenders employ human-in-the-loop systems where AI provides recommendations that loan officers review before final decisions, balancing efficiency with accountability. Regulatory uncertainty around acceptable testing makes lenders cautious about fully automated underwriting.

What are the main sources of algorithmic bias in insurance?

Bias stems from multiple sources throughout the machine learning lifecycle. Historical bias occurs when training data reflects past discrimination, teaching algorithms to perpetuate inequitable patterns. Representation bias emerges when data overrepresents some populations while underrepresenting others, causing poor performance for underrepresented groups. Measurement bias results from defining variables that correlate with protected characteristics, such as using healthcare costs as a proxy for health needs. Aggregation bias happens when algorithms treat diverse populations homogeneously, failing to account for within-group variation. Learning and evaluation bias occur during development and testing when developers inadequately assess fairness.

How do insurers test algorithms for discrimination?

Colorado’s life insurance regulation mandates Bayesian Improved Surname Geocoding, which infers race and ethnicity from names and addresses, to test for disparate demographic outcomes. Insurers must demonstrate that differences in approvals, pricing, or outcomes don’t reach a level of statistical significance indicating unfair discrimination. New York allows insurers to design their own testing approaches to show that algorithms don’t use protected characteristics or produce discriminatory patterns. Best practices include blind testing with synthetic data, counterfactual analysis examining how identical applicants with only demographic differences would be treated, and distribution matching comparing algorithmic outcomes to population distributions. Independent third-party audits provide additional validation.
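A significance check on approval rates can be sketched with a standard two-proportion z-test. This is one common statistical method, assumed here for illustration; it is not the test any regulator specifies, and the counts below are invented.

```python
import math
from typing import NamedTuple


class DisparityResult(NamedTuple):
    rate_reference: float
    rate_comparison: float
    rate_ratio: float   # comparison rate divided by reference rate
    z_statistic: float
    p_value: float      # two-sided


def approval_disparity(
    approved_ref: int, total_ref: int, approved_cmp: int, total_cmp: int
) -> DisparityResult:
    """Two-proportion z-test using the pooled approval rate for the standard error."""
    if total_ref <= 0 or total_cmp <= 0:
        raise ValueError("each group needs at least one applicant")
    rate_ref = approved_ref / total_ref
    rate_cmp = approved_cmp / total_cmp
    pooled = (approved_ref + approved_cmp) / (total_ref + total_cmp)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / total_ref + 1 / total_cmp))
    if std_error == 0 or rate_ref == 0:
        raise ValueError("rates are degenerate; the test is undefined")
    z = (rate_ref - rate_cmp) / std_error
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return DisparityResult(rate_ref, rate_cmp, rate_cmp / rate_ref, z, p_value)


# Hypothetical counts: 400 of 500 approved in one group, 300 of 500 in another.
result = approval_disparity(400, 500, 300, 500)
print(f"ratio={result.rate_ratio:.2f} z={result.z_statistic:.2f} p={result.p_value:.2e}")
```

With groups inferred probabilistically rather than observed, a production test would also need to account for uncertainty in group assignment. The significance level and any ratio threshold that trigger corrective action are policy choices, not outputs of the code.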

What rights do consumers have when AI systems deny coverage?

Rights vary by state but are expanding under new regulations. Colorado requires insurers to provide notice when AI makes consequential decisions, establish complaint processes, offer adverse decision appeals, and allow correction of inaccurate personal data. New York’s circular emphasizes AI use transparency and data correction rights. The NAIC Model Bulletin requires insurers to inform consumers about AI use and adverse outcomes, though specific appeal procedures vary by state. California’s 2024 healthcare legislation mandates human review for AI-generated coverage denials. Federal civil rights laws provide private rights of action allowing consumers to sue insurers for discriminatory algorithmic decisions under applicable statutes.

Are there penalties for insurers using discriminatory algorithms?

State insurance departments can impose penalties including fines, license suspensions, and corrective action orders against insurers deploying discriminatory algorithms. Colorado requires immediate remediation of unfair discrimination upon detection, with noncompliance potentially resulting in enforcement action. Federal agencies pursue cases under civil rights statutes carrying significant financial penalties. In the 2024 SafeRent settlement, the company agreed to pay over $2 million to resolve Fair Housing claims regarding discriminatory tenant screening algorithms. The 2025 Mobley v. Workday certification signals that AI vendors can face liability for discriminatory systems, expanding potential defendants beyond insurers themselves. Industry observers anticipate enforcement increasing as regulators develop algorithmic examination expertise.

Can third-party AI vendors be held liable for insurance discrimination?

Recent developments establish that third-party AI vendors face potential liability for discriminatory algorithms they provide to insurers. The Mobley v. Workday decision held that vendors could be liable as agents of the employers using their systems, rejecting arguments that only final decision-making entities face discrimination liability. This precedent could extend to insurance contexts where insurers deploy vendor-supplied algorithms. The NAIC Model Bulletin requires insurers to maintain oversight and control of third-party AI, establishing vendor management expectations. Contract provisions should allocate bias testing, monitoring, and remediation responsibilities. The emerging framework treats algorithmic discrimination as a shared responsibility between vendors and deployers rather than allowing vendors to escape liability through contractual limitations.

Key Takeaways

Addressing algorithmic discrimination in automated underwriting requires coordinated action across regulatory, technological, and governance dimensions as commissioners establish frameworks balancing innovation with consumer protection.

Key Insights:

- Automated insurance systems perpetuate historical discrimination through biased training data, proxy variables correlating with protected characteristics, and inadequate fairness testing during development phases

- Federal and state regulation of AI underwriting bias is expanding rapidly, with 24 states adopting the NAIC Model Bulletin and Colorado implementing comprehensive bias testing requirements

- Insurers must implement governance frameworks including board oversight, risk management programs, algorithm inventories, ongoing bias monitoring, and third-party vendor controls satisfying regulatory expectations

- Quantitative bias testing methodologies remain imperfect, with Bayesian Improved Surname Geocoding raising accuracy concerns while alternative approaches like distribution matching gain regulatory attention

- Consumer protections including transparency notices, appeal rights, and data correction processes vary significantly across states, creating compliance complexity for multi-state insurers operating with algorithmic underwriting systems

Disclaimers

This guide provides educational information only and does not constitute professional insurance, legal, or financial advice.

Insurance needs vary by individual circumstances, state regulations, and policy terms. Consult licensed professionals before making coverage decisions.

Information accurate as of October 2025. Insurance regulations and products change frequently. Verify current details with official sources and licensed agents.